I really like the idea of executable specifications. They can serve as requirements documentation, describing what your stakeholders expect from the software. They are also a useful indicator of the progress the development team is making. But what tools are there for automating acceptance tests?

Fitnesse

One of the most commonly used tools is Fitnesse. It is a wiki in which tests are written as tables. The stakeholder can write these tests, and the developers map them to test drivers. A test driver (also called a fixture) maps the acceptance test onto the code under test, which is what makes the test executable.

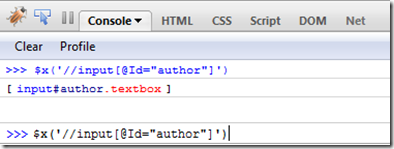
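To make the idea of a test driver concrete, here is a minimal, self-contained sketch. It does not use the Fitnesse or fitSharp API: the `Calculator` and `DivisionDriver` classes and the numerator / denominator / expected-quotient columns are hypothetical. Each table row supplies inputs and an expected result; the driver calls the code under test and reports whether each row passes.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical system under test: the code the acceptance test exercises.
public static class Calculator
{
    public static double Divide(double numerator, double denominator) => numerator / denominator;
}

// Hypothetical test driver: maps each table row (inputs + expected output)
// onto a call into the system under test and records pass/fail per row.
public static class DivisionDriver
{
    public static List<bool> Run(IEnumerable<string[]> rows)
    {
        var results = new List<bool>();
        foreach (var row in rows)
        {
            // Columns: numerator | denominator | expected quotient
            double numerator = double.Parse(row[0], CultureInfo.InvariantCulture);
            double denominator = double.Parse(row[1], CultureInfo.InvariantCulture);
            double expected = double.Parse(row[2], CultureInfo.InvariantCulture);
            double actual = Calculator.Divide(numerator, denominator);
            // Compare with a small tolerance to absorb floating-point rounding.
            results.Add(Math.Abs(actual - expected) < 1e-9);
        }
        return results;
    }
}

public static class Program
{
    public static void Main()
    {
        // Rows as a stakeholder might type them into the wiki table.
        var table = new List<string[]>
        {
            new[] { "10", "2", "5" },
            new[] { "12.6", "3", "4.2" },
            new[] { "100", "4", "33" },   // deliberately wrong expectation
        };
        var results = DivisionDriver.Run(table);
        for (int i = 0; i < results.Count; i++)
            Console.WriteLine($"row {i + 1}: {(results[i] ? "pass" : "fail")}");
    }
}
```

In Fitnesse itself the framework takes care of parsing the wiki table; what the developer writes is the part that maps a row onto the code, which is the role `DivisionDriver` plays here.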

There is also an extension to Fitnesse called Webtest. It is a generic test driver that maps Fitnesse tests to Selenium scripts. If you are not familiar with Selenium, it is a tool for testing web applications. In C#, for example, a Selenium script looks like this:

ISelenium sel = new DefaultSelenium(

"localhost", 4444, "*firefox", "http://www.google.com");

sel.Start();

sel.Open("http://www.google.com/");

sel.Type("q", "FitNesse");

sel.Click("btnG");

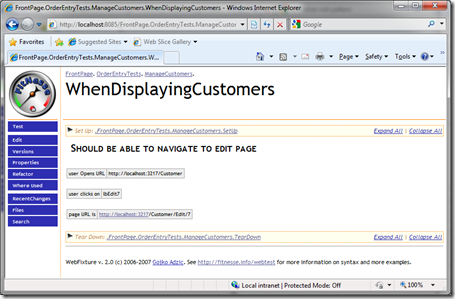

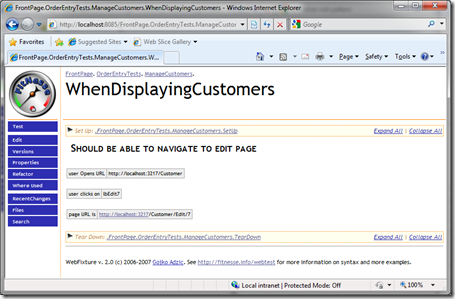

sel.WaitForPageToLoad("3000");The webtest extension for Fitnesse allows you to write acceptance tests like the following:

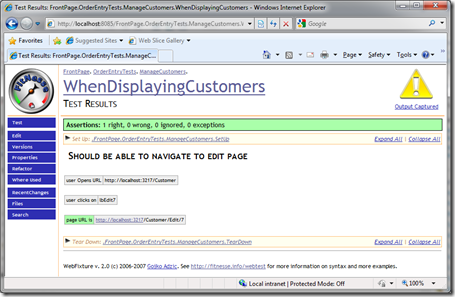

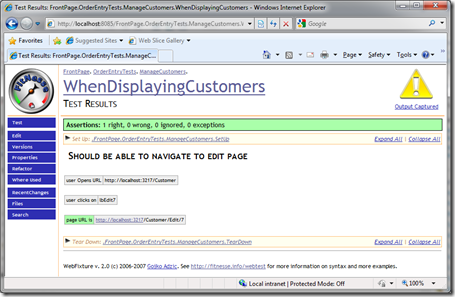
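Conceptually, a generic driver like Webtest reads each table row as a browser command and forwards it to Selenium. The sketch below illustrates that idea under stated assumptions: the `IBrowser` interface, the `RecordingBrowser` fake, and the (command, target, value) row layout are hypothetical and are not Webtest's actual table syntax. A real driver would forward these calls to Selenium methods such as `Open`, `Type`, and `Click` instead of recording them.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical browser abstraction; a real driver would delegate these
// calls to Selenium's ISelenium (Open, Type, Click, ...).
public interface IBrowser
{
    void Open(string url);
    void Type(string locator, string value);
    void Click(string locator);
}

// Fake browser that only records the commands it receives.
public class RecordingBrowser : IBrowser
{
    public List<string> Log { get; } = new List<string>();
    public void Open(string url) => Log.Add($"open {url}");
    public void Type(string locator, string value) => Log.Add($"type {locator}={value}");
    public void Click(string locator) => Log.Add($"click {locator}");
}

// Generic driver: each row is (command, target[, value]) and is dispatched
// to the matching browser call, so new tests need no new driver code.
public static class CommandTableDriver
{
    public static void Run(IBrowser browser, IEnumerable<string[]> rows)
    {
        foreach (var row in rows)
        {
            switch (row[0].ToLowerInvariant())
            {
                case "open": browser.Open(row[1]); break;
                case "type": browser.Type(row[1], row[2]); break;
                case "click": browser.Click(row[1]); break;
                default: throw new ArgumentException($"Unknown command: {row[0]}");
            }
        }
    }
}

public static class Program
{
    public static void Main()
    {
        var browser = new RecordingBrowser();
        CommandTableDriver.Run(browser, new List<string[]>
        {
            new[] { "open", "http://www.google.com/" },
            new[] { "type", "q", "FitNesse" },
            new[] { "click", "btnG" },
        });
        browser.Log.ForEach(Console.WriteLine);
    }
}
```

Because the driver is generic, a stakeholder can add new rows without any new code; only a new kind of command requires developer work.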

The test can then be executed from within the browser, which results in:

This is really cool. I especially like that the customer can open the tests in a browser and run them from there. But there are some things about Fitnesse that I don’t like. As far as I know, there is no source control integration, and writing the test drivers involves some friction. Writing the web tests is easy, but testing other functionality requires custom test drivers (fixtures), and some of these can become cumbersome and complex. I also think the tests still contain too many technical details: both the organization of the tests (think test suites) and the test definitions themselves expose technical details and are fairly fragile, so the customer can easily break them. Overall, I really like the Fitnesse approach, but it has some shortcomings. So are there any alternatives?

StoryTeller

StoryTeller is a replacement for Fitnesse. The first version of StoryTeller was built on the Fitnesse test engine, but its creator ran into the limits of that engine, so he rebooted the project from scratch with his own test engine. The progress looks promising, but the project is still very young. I’m eagerly following it, but to me it looks too immature to use in a real-world project at the moment. However, its creator, Jeremy Miller, is a respected member of the .NET community with an impressive track record, so this one is definitely worth keeping an eye on.

Twist

Thoughtworks offers a commercial product called Twist, which in my opinion is really the way to go. Users get their own interface for defining user stories in their purest form, and developers then map these stories to executable tests from their own development environment. I really like the way this separates concerns. However, Twist uses Eclipse as its host IDE, and the test drivers can only be written in Java, which is not an option for me. So what should I use?

What to use

In my opinion, Twist provides the best experience for the end user and makes it really easy for developers to write the test drivers. If Twist were integrated into Visual Studio and let you write test drivers in .NET, I would probably choose it. Unfortunately, that is not the case.

I’m also really interested in which direction StoryTeller is taking. Jeremy Miller just released a sneak preview, but it does not reveal how users will define tests. I hope it will be something like Twist.

For now, despite its shortcomings, I’m going to continue my work with Fitnesse, because it simply is the most widely used and mature tool out there. However, I’m keeping my eyes open for alternatives.